Operation Overload’s underwhelming influence and evolving tactics

17 July 2025

Operation Overload, also referred to as Matryoshka and Storm-1679, is a Russian-aligned information operation that impersonates credible sources to sow confusion and undermine public trust in European and American institutions. It posts manipulated videos and images, often branded with stolen media logos, across X (formerly Twitter), Bluesky, TikTok and Telegram. The operation also directly contacts researchers and fact checkers, likely to distract them from more pressing investigations or to generate coverage that increases its perceived influence.

This Dispatch provides an update on Operation Overload’s activities in the second quarter of 2025. ISD reviewed a sample of more than 200 X accounts and dozens of Bluesky and TikTok accounts that analysts attributed to the operation with high confidence. While the operation expanded and streamlined its efforts, we found that social media platforms removed a large majority of its posts, limiting its ability to reach and influence users.

Given its limited impact and the tendency of information threat actors to exploit media attention, ISD recommends reporting on Operation Overload in the future only if it gains notable organic engagement, shifts tactics, or large datasets reveal patterns that could help platforms and users detect its content.

Key findings

- Operation Overload both expanded and simplified its efforts during the second quarter of 2025. It began posting on TikTok and made misleading claims about more countries, while also posting more frequently in English and concentrating on media impersonation. It also prioritized longer-term influence campaigns over election interference, targeting countries that have traditionally been in the crosshairs of Russian influence operations, like Ukraine and Moldova, more frequently than countries that held elections during the monitored period.

- Social media platforms appear to have stepped up efforts to remove Operation Overload content, limiting its reach and impact. X removed 73 percent of sampled posts, compared to just 20 percent in the first quarter of 2025. On TikTok and Bluesky, removal rates were higher than 90 percent. This could reflect platforms’ growing awareness of the operation, or it could indicate that the operation’s use of bots and other manipulation tactics is brazen enough to trigger automated moderation systems. ISD analysts did not see notable organic engagement among the remaining posts.

- The operation focused most heavily on Moldova, suggesting that the country’s September parliamentary election is likely to be the target of aggressive Russian interference efforts. More than a quarter of the posts collected targeted Moldova; many of these attacked pro-Western President Maia Sandu with allegations of corruption and incompetence.

Methodology

ISD identified roughly 300 accounts linked to Operation Overload across X, Bluesky and TikTok that were active in the second quarter of 2025. We surfaced these accounts by searching for content tied to narratives, countries and organizations frequently targeted by Russian information operations. Attribution was based on a range of operational indicators, including account creation dates, the use of AI-generated voiceovers and stolen logos in posted videos, the appearance of QR codes or puzzle pieces in posted images, and interactions with suspected bot networks. While not exhaustive, the sample is broad and representative enough to reveal key trends in the operation and give an indication of how it may evolve going forward.

ISD did not collect Telegram channels, since Operation Overload’s posts on that platform often appear aimed at Russian-speaking audiences rather than at foreign ones. A recent comprehensive report from CheckFirst and Reset details how Operation Overload uses hundreds of Telegram channels to distribute content among these audiences.

New tactics and targets

While Operation Overload continued its wide-scale impersonation efforts, it shifted tactics in the second quarter of 2025. Based on analysis of data from X (excluding identical posts on other platforms to reduce duplication), we found that 70 percent of posts attempted to impersonate media organizations, up from 50 percent in the previous quarter. Euronews, the BBC and Deutsche Welle (DW) were the most frequently impersonated outlets.

Conversely, the volume of content impersonating individuals, such as journalists or academics, dropped by 30 percentage points, falling to just 7 percent of all posts. The operation also concentrated its language use: English posts made up 96 percent of content, compared to 69 percent in the previous quarter. This tactical shift likely reflects an effort to accelerate and simplify content production.

The operation also expanded to TikTok in late May, signaling an intent to reach audiences beyond the news-focused communities generally active on X, Bluesky and Telegram. Between May 26 and June 30, ISD identified more than 60 TikTok accounts linked to the operation.

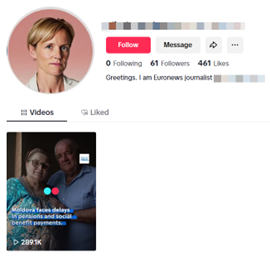

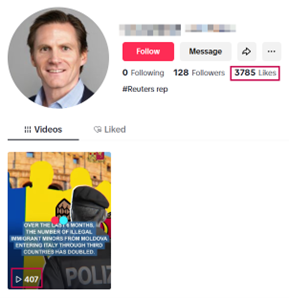

As on Bluesky, Operation Overload’s TikTok accounts posed as members of the media. More than half claimed to work for Euronews; others posed as journalists at outlets including Reuters, Politico and France24. The accounts also appeared to use AI-generated profile pictures, most of which resembled professional headshots, to make the profiles seem more credible.

Image 1. Operation Overload TikTok account with nearly 300,000 views posing as Euronews journalist.

Image 2. Operation Overload TikTok account with no engagement posing as a Reuters journalist.

The operation’s geographic targets also shifted. Although it mentioned more countries in the second quarter, nearly half of its posts focused on Ukraine and Moldova. Additionally, unlike its aggressive attempts to spread false claims ahead of Germany’s federal election in February, the operation made only minimal attempts to influence discourse around elections in Poland and Romania during the second quarter. This suggests a more selective approach to election interference and a renewed emphasis on longer-term influence campaigns targeting countries that have traditionally been central to Russian operations.

A message for Moldova

Operation Overload targeted Moldova more aggressively than any other country throughout the second quarter, despite barely mentioning it in the first quarter of the year. The operation’s narratives focused primarily on criticizing President Maia Sandu and spreading false claims about the country’s economic conditions and military readiness.

The attacks on Sandu reflect Russia-linked influence operations’ broader focus on eroding public trust in democratic institutions in Moldova, amplifying local grievances and manipulating elections to derail the country’s path towards EU accession. While these operations have been extensive, they have historically been carried out mainly offline, through vote buying, organized transport to polls, fraudulent voting and voter intimidation. Operation Overload’s recent focus on Moldova may be a precursor to other Russian efforts to manipulate the September parliamentary elections.

The operation impersonated dozens of organizations in posts targeting Moldova. While 59 percent of those were media outlets, the operation also impersonated researchers, the Orthodox Church, hacktivists and law enforcement. The three most impersonated organizations were Euronews, Bellingcat and DW.

Image 3. Operation Overload post on X spreading false information about President Sandu.

In terms of narratives, the operation portrayed Moldova as a corrupt, chaotic and vulnerable state teetering on the edge of collapse. Much of the focus was on Sandu herself, who was depicted as dishonest and unpopular among both her constituents and EU officials. Posts also depicted Moldova as a country overrun by criminals and members of the LGBTQ+ community. On national security and defense, the operation cast doubt on Moldova’s military readiness and claimed that Romania intends to annex the country.

However, despite the high volume of content targeting Moldova, analysts found little evidence of genuine engagement with Operation Overload posts on the topic. It is possible these efforts were hampered by quick response times from the platforms. Most of the posts were removed during the analysis period: Bluesky and TikTok removed over 90 percent of Moldova-related posts in our sample, while X removed 75 percent.

Minor modifications, insignificant impact

Despite the operation’s sophistication and evolution, it appears to have failed at two key objectives of information operations: engaging real users and evading platform detection while doing so. X removed 73 percent of sampled posts, while Bluesky and TikTok took down more than 90 percent. The high removal rates may reflect increased investigative efforts by platforms. Alternatively, the operation may have become predictable enough to trigger automated moderation systems. Regardless, platforms’ ability to identify and remove the operation’s content has likely curtailed its reach and influence.

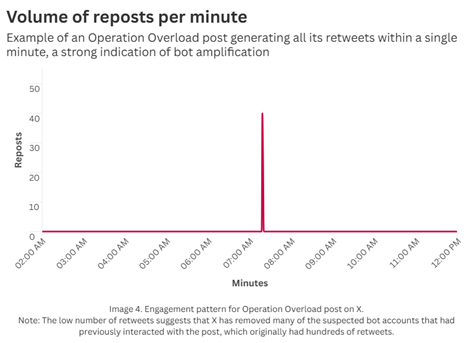

ISD reviewed a sample of posts still available online and did not find evidence of substantial organic engagement. As previous ISD investigations into Operation Overload have indicated, the operation likely used bots on X and TikTok to generate most, if not all, of its interaction metrics. On X, the operation appears to use two distinct bot networks: one that synchronizes all reposts within the same minute, and another that reposts once per minute over a span of several hours. Some TikTok accounts in the sample had more likes than views, which is usually an indicator of artificial engagement. One outlier TikTok account posted twice but received no followers, views or likes, suggesting that Operation Overload’s amplification tactics are applied unevenly and that its engagement metrics depend on manipulation. No bot activity was detected on Bluesky, where posts averaged less than one like each.

Image 4. Operation Overload post on X generating all reposts within a single minute. The low repost count reflects how many of the apparent bot accounts that previously reposted it have since been removed by X.

Image 5. Operation Overload post on TikTok gaining nearly ten times more likes than views, which suggests some form of artificial amplification.
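The two X bot patterns and the TikTok likes-versus-views signal described above can be expressed as simple timing and ratio checks. The sketch below is a hypothetical illustration, not ISD’s actual detection method, which this Dispatch does not describe. The function names and thresholds (a 10-second tolerance around a 60-second gap, a one-hour minimum span) are assumptions chosen for clarity, and it assumes repost timestamps for a single post are already available as Python `datetime` objects.

```python
from datetime import datetime, timedelta


def classify_repost_timing(
    timestamps: list[datetime],
    metered_tolerance_s: float = 10,               # hypothetical tolerance around a 60 s gap
    min_metered_span: timedelta = timedelta(hours=1),  # hypothetical minimum duration
) -> str:
    """Hypothetical heuristic labelling a post's repost timing pattern."""
    if len(timestamps) < 2:
        return "insufficient data"
    ts = sorted(timestamps)

    # Pattern 1 ("burst"): every repost falls within the same clock minute.
    first_minute = ts[0].replace(second=0, microsecond=0)
    if all(t.replace(second=0, microsecond=0) == first_minute for t in ts):
        return "burst"

    # Pattern 2 ("metered"): roughly one repost per minute, sustained for a long window.
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(ts, ts[1:])]
    steady = all(abs(gap - 60) <= metered_tolerance_s for gap in gaps)
    if steady and ts[-1] - ts[0] >= min_metered_span:
        return "metered"

    return "unclassified"


def likes_exceed_views(likes: int, views: int) -> bool:
    """More likes than views is usually a sign of artificial engagement."""
    return likes > views
```

A flag from either check is only a lead for manual review: genuine posts can occasionally attract tightly clustered reposts, so in practice such signals would be weighed alongside the other attribution indicators listed in the methodology.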

Taken together, this suggests that despite its technical sophistication and cross-platform presence, Operation Overload is delivering limited geopolitical value. Its consistent inability to drive authentic engagement or meaningfully disrupt political discourse underscores a growing disconnect between the resources invested in the operation and its strategic return.

Conclusion: is sunlight the best disinfectant?

Operation Overload is a persistent, high-volume and sophisticated campaign, but it rarely appears to influence real audiences. This is in part because platforms like X, Bluesky and TikTok have taken down many of its recent posts, and in part because few real users appear to engage with even the remaining posts.

Going forward, platforms should continue to remove the operation’s content, coordinating with each other to identify videos and images posted across multiple sites. Researchers and media outlets should continue their monitoring efforts. However, they should exercise caution in amplifying the operation’s content unless it demonstrates clear signs of genuine virality or noteworthy tactical shifts. Regular reporting on low-impact operations can inadvertently enhance their perceived influence, introduce their content to new audiences, and reduce public trust in the information environment. For operations with limited reach, approaches that prioritize disruption over exposure are key to limiting their impact.